I saw the impressive compression site, which lists the properties of lossless compression algorithms on different CPUs and data sets.

I wanted to do something similar myself, on a particular platform and data set: minimizing the disk usage of a daily backup job within reasonable CPU bounds.

The system is an AMD Ryzen 7 3800XT running Ubuntu 18.04, with all programs installed from the official Ubuntu sources with apt. I wanted to use all cores, so every algorithm was run with `-threads=0` or the equivalent option.

The data is text dumps from InfluxDB, a very repetitive data set. I had 50 GB of uncompressed data on a fast, unencrypted NVMe SSD.

I tried lz4, pigz, zstd, brotli, bzip2, xz, and zpaq (lrzip).

All were set to use all CPU cores where possible. I measured wall clock time (seconds) and compression ratio (1x being no gain at all). The command line I used was:

`/usr/bin/time --format="%U\t%s\t%e\t%M\t%x\t%C"`

The raw data are available here.
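To make the two measured quantities concrete, here is a minimal sketch of a timing harness. It is not the script used for the results in this post: it assumes Python's standard-library codecs (`zlib`, `bz2`, `lzma`) as in-memory stand-ins for the command line tools, and the sample data is synthetic. The `measure` helper and the sample line format are made up for illustration.

```python
import bz2
import lzma
import time
import zlib


def measure(name, compress, data):
    """Return (name, wall-clock seconds, compression ratio) for one codec."""
    start = time.perf_counter()
    compressed = compress(data)
    elapsed = time.perf_counter() - start
    # Ratio as defined in the post: 1x means no gain at all.
    ratio = len(data) / len(compressed)
    return name, elapsed, ratio


# Synthetic, highly repetitive sample loosely resembling a text dump.
# The line format is hypothetical, not real InfluxDB output.
sample = b"".join(
    b"cpu,host=server01 usage=%d %d\n" % (i % 100, 1_600_000_000 + i)
    for i in range(20_000)
)

# Standard-library codecs standing in for the CLI tools (an assumption).
codecs = [
    ("zlib level 6", lambda d: zlib.compress(d, 6)),
    ("bz2 level 9", lambda d: bz2.compress(d, 9)),
    ("lzma preset 6", lambda d: lzma.compress(d, preset=6)),
]

results = [measure(name, fn, sample) for name, fn in codecs]
for name, seconds, ratio in results:
    print(f"{name}\t{seconds:.3f} s\t{ratio:.1f}x")
```

These in-process codecs are single-threaded, so the timings say nothing about the multi-core runs described here; the sketch only shows how wall clock time and ratio are derived.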

These are the methods that are best under various definitions of best:

| Method | Wall clock (s) | Compression ratio (x) | Comment |
|---|---|---|---|
| zstd -5 | 58.1 | 11.6 | Best bang for the buck |
| zstd -19 | 1471 | 21.1 | Best compression |
| zstd -4 | 57.3 | 10.9 | Fastest |
| zstd -8 | 81.3 | 12.1 | Fastest over 12x |
| xz -4 | 374 | 16.0 | Fastest over 15x |
| lz4 -1 | 58.2 | 6.9 | Lowest user mode (total cpu effort) |

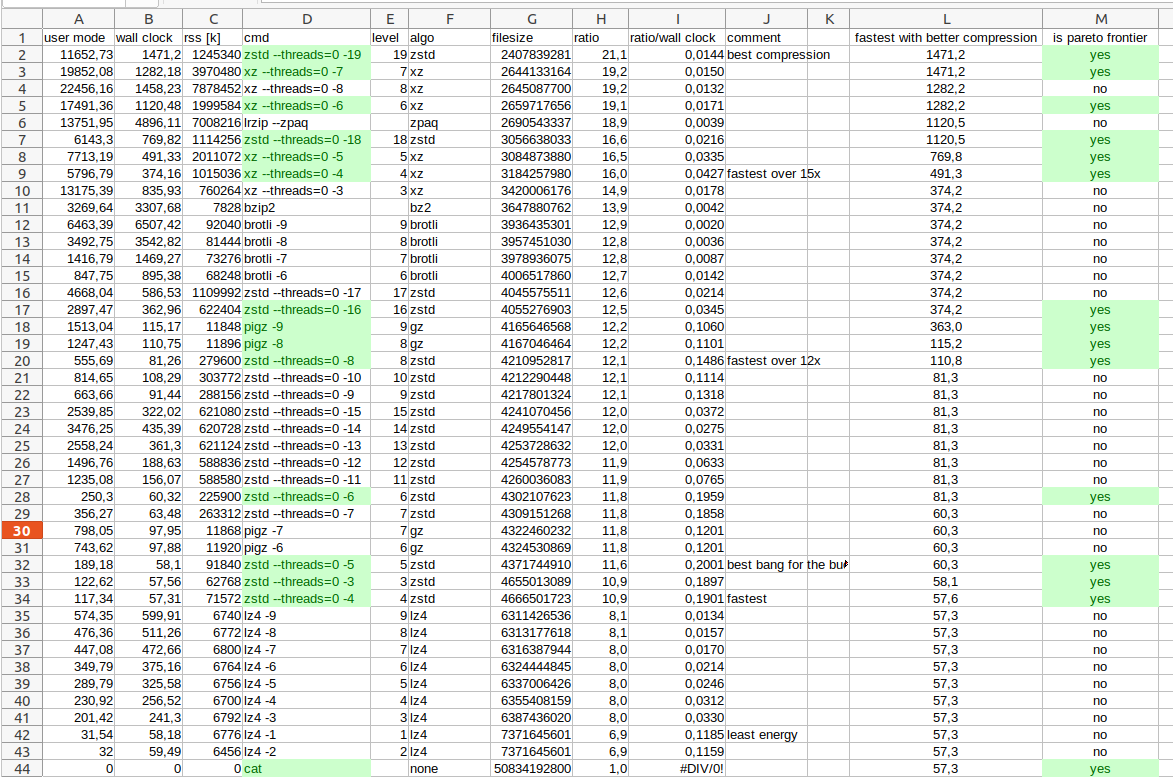

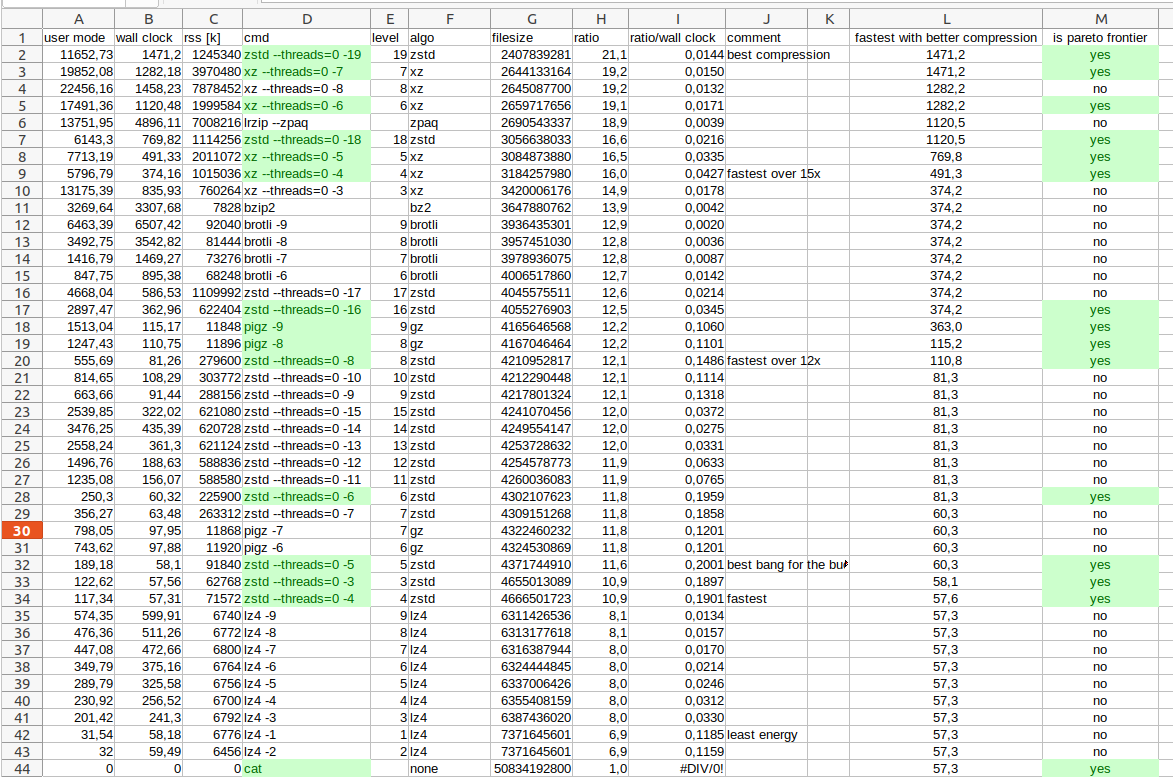

Here is an image showing the results sorted by compression ratio, with the entries that are part of the Pareto frontier highlighted with a green background.
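The Pareto frontier over wall clock time and compression ratio can be computed with a short sketch like the one below. It uses only the ten rows shown in the tables of this post, not the full raw data, so the highlighted set in the image may contain more entries. The `pareto_frontier` helper is a hypothetical name.

```python
def pareto_frontier(results):
    """Return the rows that no other row beats on both time and ratio."""
    frontier = []
    best_ratio = float("-inf")
    # Walk from fastest to slowest; on equal time, the higher ratio goes first.
    for name, seconds, ratio in sorted(results, key=lambda r: (r[1], -r[2])):
        # A slower method earns its place only by compressing better
        # than everything that is faster than it.
        if ratio > best_ratio:
            frontier.append((name, seconds, ratio))
            best_ratio = ratio
    return frontier


# (method, wall clock seconds, compression ratio) from the tables in this post.
measurements = [
    ("zstd -5", 58.1, 11.6),
    ("zstd -19", 1471, 21.1),
    ("zstd -4", 57.3, 10.9),
    ("zstd -8", 81.3, 12.1),
    ("xz -4", 374, 16.0),
    ("lz4 -1", 58.2, 6.9),
    ("pigz -6", 97.9, 11.8),
    ("brotli -6", 895, 12.7),
    ("bzip2", 3308, 13.9),
    ("zpaq", 4896, 18.9),
]

frontier = pareto_frontier(measurements)
for name, seconds, ratio in frontier:
    print(f"{name}\t{seconds} s\t{ratio}x")
```

On these ten rows the frontier is zstd -4, zstd -5, zstd -8, xz -4 and zstd -19. lz4 -1 drops out because this sketch looks at only two dimensions; it was best on user mode CPU time, which is not included here.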

For reference, here are some of the other methods which were not best in any sense:

| Method | Wall clock (s) | Compression ratio (x) | Comment |
|---|---|---|---|
| pigz -6 | 97.9 | 11.8 | |
| brotli -6 | 895 | 12.7 | |
| bzip2 | 3308 | 13.9 | |
| zpaq | 4896 | 18.9 | |

The conclusion is that zstd is very flexible: it can be tuned anywhere between very fast and highly compressing.

In the end, I decided to use xz -6, which gave 19.1x in 1120 seconds, the maximum time I think is reasonable for this particular backup job.
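The selection rule behind that choice, the highest ratio that still fits a time budget, can be sketched as follows. The candidate list is a subset of the numbers quoted in this post, treating 1120 seconds as the cap is one reading of the sentence above, and `best_within_budget` is a hypothetical helper.

```python
def best_within_budget(results, max_seconds):
    """Pick the highest compression ratio among methods within the time cap."""
    eligible = [r for r in results if r[1] <= max_seconds]
    if not eligible:
        return None  # nothing finishes in time
    return max(eligible, key=lambda r: r[2])


# (method, wall clock seconds, compression ratio) quoted in this post.
candidates = [
    ("zstd -5", 58.1, 11.6),
    ("zstd -8", 81.3, 12.1),
    ("xz -4", 374, 16.0),
    ("xz -6", 1120, 19.1),
    ("zstd -19", 1471, 21.1),
]

# Assumed budget: 1120 s, the time taken by the chosen setting.
choice = best_within_budget(candidates, max_seconds=1120)
print(choice)
```

With a budget of 1120 seconds this picks xz -6; zstd -19 compresses better but takes 1471 seconds, which is over the cap.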